Check out our latest summary of the article “EU AI Act: first regulation on artificial intelligence”. In this concise overview, we’ve distilled the key insights from the original 13-page text to save you time. Instead of spending approximately 35 minutes reading the full text, you can take in the most important points in a three-minute read. However, we encourage you to read the complete act to gain a comprehensive understanding of the regulatory framework on artificial intelligence. Let’s dive into the highlights and give you a basic grasp of the upcoming legislation.


Key Goals of EU AI Regulation

The European Union is pursuing a comprehensive approach to regulate artificial intelligence in order to harness its potential while ensuring its safe and ethical development and usage. The EU’s digital strategy focuses on creating favorable conditions for AI innovation.

The EU aims to establish AI legislation that prioritizes human interests and values. It seeks to create a trustworthy framework that implements ethical standards, supports jobs, helps build a competitive “AI made in Europe” and influences global standards.

EU AI Regulatory Framework and Key Elements:

- Parliament’s priority is to make sure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory and environmentally friendly. AI systems should be overseen by people, rather than by automation, to prevent harmful outcomes.

- The Commission proposes to establish a technology-neutral definition of AI systems in EU law (largely based on a definition already used by the OECD) and to lay down a classification of AI systems, with requirements and obligations tailored to a ‘risk-based approach’, although some commentators have questioned this approach.

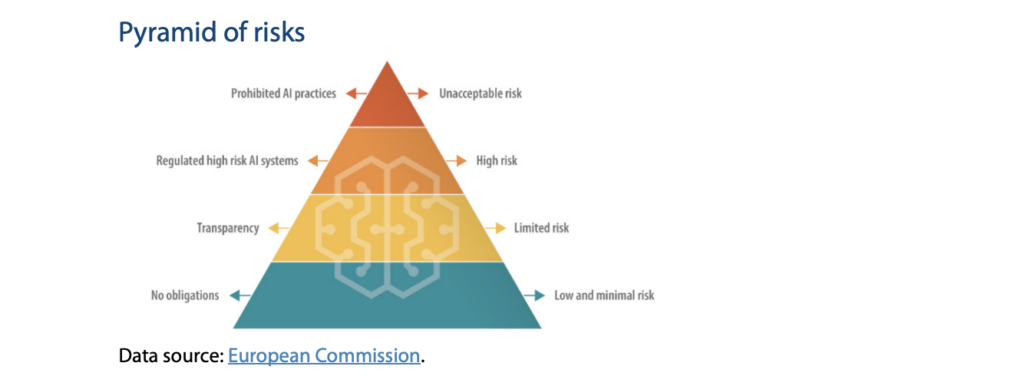

- Risk Classification:

  - The act classifies AI systems into risk categories ranging from unacceptable risk to minimal risk.

  - Some AI systems presenting ‘unacceptable’ risks would be prohibited.

  - A wide range of ‘high-risk’ AI systems would be authorised, but subject to a set of requirements and obligations to gain access to the EU market.

  - AI systems presenting only ‘limited risk’ would be subject to very light transparency obligations.

- Ebers and others stress that the AI Act lacks effective enforcement structures, as the Commission proposes to leave the preliminary risk assessment, including the qualification as high-risk, to the providers’ self-assessment.

- The draft regulation does not apply to AI systems developed or used exclusively for military purposes, nor to public authorities in a third country or international organisations using AI systems in the framework of international agreements for law enforcement and judicial cooperation.

- Human-Centric Principles: AI systems used within the EU should prioritize safety, transparency, traceability, non-discrimination, and environmental friendliness. Human oversight is emphasized to prevent harmful outcomes.

- Data Strategy: The EU recognizes the significance of a robust data strategy to support AI development. It emphasizes the importance of data sharing, data spaces, and legislation to build trust.

Prohibited and High-Risk AI Practices:

- Unacceptable Risk Practices:

  - Harmful AI practices that pose a clear threat to safety, livelihoods, and rights are explicitly prohibited. These include manipulative techniques, exploitation of vulnerable groups (for example, voice-activated toys that encourage dangerous behaviour in children), social scoring, and real-time remote biometric identification such as facial recognition.

  - Some exceptions may be allowed. For instance, “post” remote biometric identification systems, where identification occurs after a significant delay, will be allowed for the prosecution of serious crimes, but only after court approval.

- High-Risk AI Systems: AI systems with adverse impacts on safety and fundamental rights fall into high-risk categories. These include critical infrastructure management, education, employment, law enforcement, and more.

Requirements for High-Risk AI Systems:

- The AI Act regulates ‘high-risk’ AI systems that create adverse impacts on people’s safety or their fundamental rights. The draft text distinguishes between two categories of high-risk AI systems:

  1) AI systems that are used in products falling under the EU’s product safety harmonization legislation. This includes toys, aviation, cars, medical devices and lifts.

  2) AI systems falling into eight specific areas, which will have to be registered in an EU database:

     - Biometric identification and categorisation of natural persons

     - Management and operation of critical infrastructure

     - Education and vocational training

     - Employment, worker management and access to self-employment

     - Access to and enjoyment of essential private services and public services and benefits

     - Law enforcement

     - Migration, asylum and border control management

     - Assistance in legal interpretation and application of the law

- High-risk AI systems must undergo an ex-ante conformity assessment and be registered in an EU-wide database before deployment.

- Any AI products and services governed by existing product safety legislation will fall under the existing third-party conformity frameworks that already apply (e.g. for medical devices).

- Providers of AI systems not currently governed by EU legislation would have to conduct their own conformity assessment (self-assessment), showing that they comply with the new requirements and can use CE marking.

- Only high-risk AI systems used for biometric identification would require a conformity assessment by a ‘notified body’.

- High-risk AI systems must comply with a range of requirements, including risk management, testing, transparency, human oversight, cybersecurity, and data governance.

- Generative AI systems must disclose that content is AI-generated (see also McKinsey & Co’s “What Every CEO Should Know About Generative AI”), be designed to prevent the generation of illegal content, and publish summaries of the copyrighted data used for training.
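The three conformity-assessment routes described above can be pictured as a small decision function. The following is a simplified Python sketch of this summary, not a legal test: `AssessmentRoute`, `assessment_route` and its two boolean inputs are hypothetical names, the function assumes the system has already been classified as high-risk, and the order of the checks is our own assumption.

```python
from enum import Enum


class AssessmentRoute(Enum):
    """Conformity-assessment routes for high-risk AI systems (hypothetical labels)."""
    EXISTING_THIRD_PARTY_FRAMEWORK = "existing third-party framework"
    NOTIFIED_BODY = "notified body"
    SELF_ASSESSMENT = "self-assessment"


def assessment_route(covered_by_product_safety_law: bool,
                     used_for_biometric_identification: bool) -> AssessmentRoute:
    """Pick a route for a system already classified as high-risk.

    Assumption: product safety legislation is checked first; the
    regulation summary does not state an order of precedence.
    """
    if covered_by_product_safety_law:
        # e.g. medical devices keep the third-party checks that already apply
        return AssessmentRoute.EXISTING_THIRD_PARTY_FRAMEWORK
    if used_for_biometric_identification:
        # the only case this summary says needs a notified body
        return AssessmentRoute.NOTIFIED_BODY
    # everything else: the provider's own assessment, after which CE marking can be used
    return AssessmentRoute.SELF_ASSESSMENT


# Usage: a CV-screening tool (employment area, no product law, no biometrics)
print(assessment_route(False, False).value)  # self-assessment
```

Keeping the routes in an `Enum` rather than free-form strings makes it harder to mistype a route name when the result is passed on to other checks.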

Limited Risk and Low/Minimal Risk AI Systems:

- Limited-risk AI systems, such as systems that interact with humans (e.g. chatbots), emotion recognition systems, biometric categorisation systems, and AI systems that generate or manipulate image, audio or video content (e.g. deepfakes), would be subject to a limited set of transparency obligations: users should be made aware when they are interacting with AI.

- Low/minimal-risk AI systems can be developed and used without additional legal obligations. However, voluntary adoption of high-risk requirements is encouraged.
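Taken together, the four risk tiers work like a lookup from category to obligations. Here is a minimal Python sketch of that idea. `RiskTier`, `OBLIGATIONS` and `may_be_placed_on_market` are hypothetical names, and the obligation strings paraphrase this summary rather than the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from this summary (hypothetical wording).
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: [
        "ex-ante conformity assessment",
        "registration in an EU-wide database",
        "risk management, testing, transparency, human oversight, "
        "cybersecurity and data governance",
    ],
    RiskTier.LIMITED: ["make users aware they are interacting with AI"],
    RiskTier.MINIMAL: [],  # no extra legal obligations; voluntary adoption encouraged
}


def may_be_placed_on_market(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


for tier in RiskTier:
    status = "allowed" if may_be_placed_on_market(tier) else "banned"
    print(f"{tier.value:<12} {status:<8} {len(OBLIGATIONS[tier])} obligation(s)")
```

The hard part in practice is deciding which tier a system belongs to. As noted above, the draft leaves that preliminary assessment to the providers themselves.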

Governance, Enforcement, and Sanctions:

- Member States designate competent authorities for AI supervision. They would need to lay down rules on penalties, including administrative fines, and take all measures necessary to ensure that these are properly and effectively enforced.

- A European AI Board would oversee the regulation at the EU level.

- National surveillance authorities would be responsible for assessing operators’ compliance with the obligations and requirements for high-risk AI systems. They would have access to confidential information (including the source code of the AI systems) and be subject to binding confidentiality obligations.

- Fines for non-compliance can be substantial. Depending on the severity of the infringement, administrative fines can reach up to €30 million or 6% of a company’s total worldwide annual turnover, whichever is higher.

- Some commentators deplore a crucial gap in the AI Act, which does not provide for individual enforcement rights. Ebers and others stress that individuals affected by AI systems and civil rights organisations have no right to complain to market surveillance authorities or to sue a provider or user for failure to comply with the requirements.
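Under the Commission’s proposal, the top fine tier for a company is the higher of a fixed amount and a share of turnover. Lower caps apply to less serious infringements. The arithmetic can be sketched in Python as follows. This assumes turnover is given in whole euros. `max_fine_eur` and the constant names are hypothetical, and the function computes only a ceiling, not the fine an authority would actually impose.

```python
FIXED_CAP_EUR = 30_000_000   # €30 million
TURNOVER_SHARE_PERCENT = 6   # 6% of total worldwide annual turnover


def max_fine_eur(annual_turnover_eur: int, is_company: bool = True) -> int:
    """Ceiling of the top fine tier: the fixed cap or the turnover share, whichever is higher."""
    if not is_company:
        # the turnover-based alternative only applies to companies
        return FIXED_CAP_EUR
    # integer arithmetic avoids floating-point rounding on large turnovers
    turnover_cap = annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


print(max_fine_eur(100_000_000))    # 30000000 (6% would be only 6 million)
print(max_fine_eur(2_000_000_000))  # 120000000 (6% of 2 billion)
```

For smaller firms the fixed €30 million cap dominates. For large firms the percentage becomes the binding ceiling.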

In conclusion, the EU’s AI regulatory framework aims to strike a balance between fostering AI innovation and ensuring the ethical and safe use of AI technologies across various sectors. The proposed regulations focus on risk-based categorization, transparency, and accountability while striving to establish Europe as a leader in AI development.

Idego • Feb 01
